California Pioneers Digital Safety with New Social Media and AI Laws

California enacts laws for social media warnings and AI chatbot safeguards, setting a precedent in digital safety and accountability.

California Governor Gavin Newsom has signed into law two groundbreaking bills aimed at regulating social media platforms and artificial intelligence (AI) chatbots. These laws represent a significant step in digital content oversight and AI safety. They require social media companies to display warning labels about potential health risks, and they establish safety measures for AI chatbots, particularly to protect vulnerable populations such as children. Signed in October 2025, this legislation positions California as a leader in addressing the challenges that digital technologies pose to public health and safety.

Background: Rising Concerns Over Social Media and AI

As social media platforms have become ubiquitous, concerns over their impact on mental health, the spread of misinformation, and user safety have intensified. Similarly, the rapid advancement of AI chatbots, tools capable of simulating human conversation, has raised alarms about misinformation, manipulation, and potential harm, especially to minors. Lawmakers have called for increased regulation to mitigate these risks without stifling innovation.

Governor Newsom’s recent legislative actions respond directly to these challenges, following months of debate and advocacy by health experts, consumer groups, and technology regulators.

Key Provisions of the New Laws

1. Mandatory Social Media Warning Labels

The first bill requires social media companies operating in California to display clear warning labels that inform users about the “profound” mental health risks associated with platform use. The labels must be displayed prominently on covered platforms, which include major services such as Instagram, TikTok, and Facebook.

- The warnings specifically highlight risks related to anxiety, depression, and addiction.

- Platforms must provide users with resources for mental health support alongside the warning labels.

- Companies have six months to comply and face penalties if they fail to implement the labels.

2. AI Chatbot Safety and Child Protection Measures

The second law focuses on AI chatbots, particularly those accessible to children. It requires companies to implement safeguards against harmful or misleading chatbot interactions, including:

- Mandatory disclaimers when users interact with AI, clarifying that the chatbot is not human.

- Restrictions on data collection from minors and enhanced parental control features.

- Regular audits by independent experts to ensure AI responses do not promote misinformation or harmful content.

- A requirement for companies to maintain transparency around AI training data and algorithms.

Senator Steve Padilla, one of the bill’s sponsors, described the legislation as the “first-in-the-nation comprehensive AI chatbot safeguard law,” emphasizing its role as a model for other states and the federal government.

Industry and Expert Reactions

The legislation has drawn mixed reactions from industry stakeholders and experts:

- Supporters, including mental health advocates and child welfare organizations, praised the laws as necessary protections in an era dominated by digital and AI technologies. They argue the laws set essential standards to hold tech companies accountable for their platforms’ societal impact.

- Critics from the tech industry warn that the regulations could stifle innovation and impose costly compliance requirements, especially for startups. Some argue that warning labels may not effectively change user behavior and could lead to legal ambiguities over content moderation responsibilities.

- Legal experts note the potential for these laws to spark nationwide debates on the balance between regulation, free speech, and technological advancement.

Context and Implications: A Blueprint for Digital Safety

California’s new laws arrive amid a broader global wave of regulatory efforts to address the risks posed by social media and AI technologies. The state’s pioneering approach could serve as a blueprint for other jurisdictions seeking to impose more stringent digital safety requirements.

- By mandating health-related warnings on social media, California acknowledges the growing evidence linking digital platforms to mental health issues, especially among teenagers.

- The AI chatbot safeguards reflect rising concerns over AI accountability and transparency, especially as chatbots become integrated into education, healthcare, and customer service.

- These laws may prompt technology companies to reevaluate their design and content policies, potentially accelerating the adoption of safer, more ethical AI and social media practices.

In the coming months, policymakers, industry leaders, and advocacy groups nationwide will closely monitor how these regulations are implemented. The enforcement of these laws and the response from tech companies will likely shape future debate on digital governance.

Visual Elements

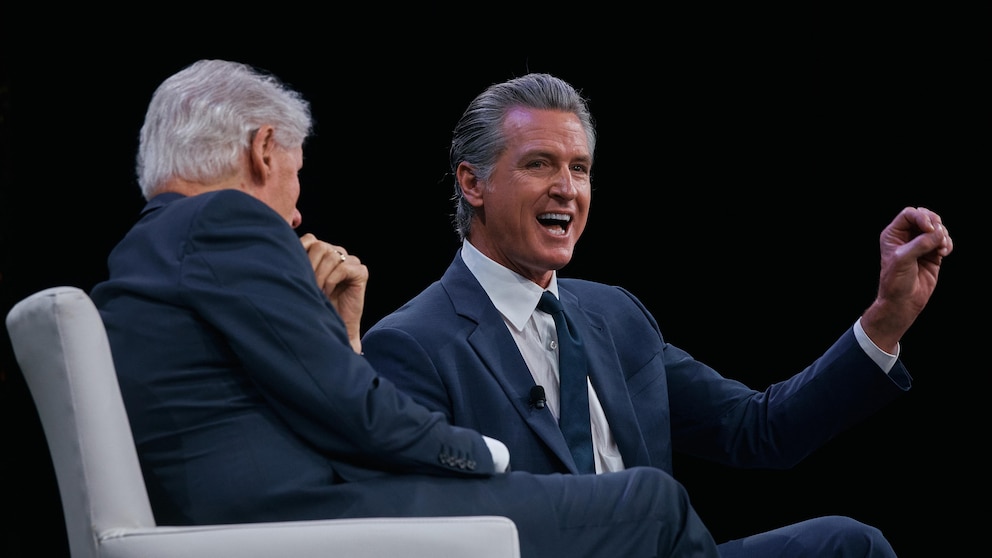

- Official photo of Governor Gavin Newsom signing the bills, highlighting the legislative moment.

- Screenshots from social media platforms demonstrating prototype warning labels.

- Infographics illustrating AI chatbot interaction safeguards and user protections.

- Portrait of Senator Steve Padilla, key proponent of the AI chatbot bill.

Conclusion

Governor Gavin Newsom’s signing of laws requiring social media warning labels and AI chatbot safeguards marks a historic move in regulating digital technologies to protect public health and safety. As the first state in the US to enact such comprehensive measures, California sets a precedent for balancing innovation with responsibility. These laws underscore the growing recognition that technology companies must be accountable for the societal impact of their platforms—particularly on vulnerable users such as children and teenagers. The coming months will reveal how these regulations influence industry practices and whether other states follow California’s lead.