ChatGPT Brings Voice Mode to Main Interface, Expanding Conversational AI Access

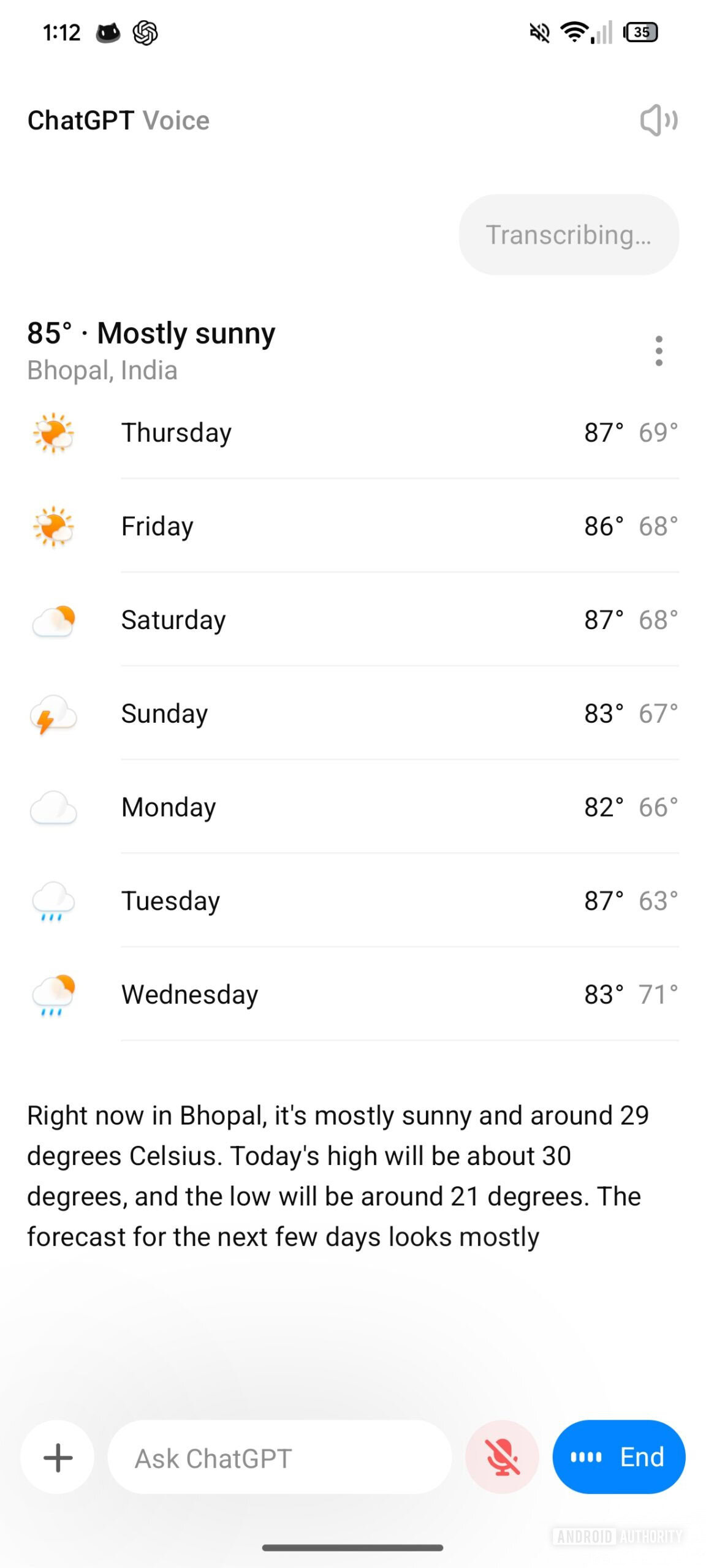

OpenAI has integrated voice mode directly into ChatGPT's main interface, making voice-based conversations more accessible to users across desktop and mobile platforms. The feature enhances user interaction by enabling natural spoken dialogue without requiring separate applications or menu navigation.

OpenAI has integrated voice mode directly into ChatGPT's main interface, streamlining access to voice-based conversations and marking a significant step toward more natural human-computer interaction. The feature, previously available through separate pathways, is now seamlessly embedded within the primary chat environment, allowing users to switch between text and voice input without friction.

Enhanced User Experience Through Integration

The integration of voice mode into the main interface represents a strategic move to democratize voice-based AI interaction. Rather than requiring users to navigate through settings or separate applications, the voice feature is now immediately accessible from the core ChatGPT interface. This design choice reduces friction and encourages broader adoption of voice conversations, particularly among users who prefer spoken dialogue or require accessibility accommodations.

Voice mode supports real-time spoken conversation with improved responsiveness. It maintains context across multiple exchanges, enabling coherent multi-turn dialogues that feel closer to talking with a human assistant than to issuing commands and waiting for responses.

Technical Implementation and Accessibility

The main interface integration reflects OpenAI's stated focus on accessibility. Voice mode now functions across desktop and mobile platforms, with platform-specific optimizations intended to keep performance consistent. Users can initiate voice conversations with a single tap or click, eliminating the need for complex navigation sequences.

The implementation includes:

- Real-time audio processing for immediate response generation

- Cross-platform synchronization maintaining conversation history across devices

- Contextual switching between text and voice within the same session

- Accessibility features supporting users with visual or motor impairments
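
To make the idea of switching between text and voice within one session more concrete, here is a minimal Python sketch. It is not OpenAI's implementation, and none of its names (`Session`, `Turn`, `add_turn`, `context`) come from any OpenAI API; they are hypothetical. It only illustrates one plausible design, in which typed and spoken turns are stored in a single shared history so that context carries over regardless of input mode.

```python
from dataclasses import dataclass, field
from typing import List, Literal

# Hypothetical model: every name here is illustrative, not an OpenAI API.
Modality = Literal["text", "voice"]


@dataclass
class Turn:
    role: str           # "user" or "assistant"
    modality: Modality  # how the turn was entered or delivered
    content: str        # typed text, or a transcript of the spoken audio


@dataclass
class Session:
    turns: List[Turn] = field(default_factory=list)

    def add_turn(self, role: str, modality: Modality, content: str) -> None:
        # Text and voice turns go into the same list, so switching
        # modes never starts a new conversation.
        self.turns.append(Turn(role, modality, content))

    def context(self) -> List[str]:
        # The context passed to the model ignores modality entirely.
        return [f"{t.role}: {t.content}" for t in self.turns]


session = Session()
session.add_turn("user", "text", "Plan a three-day trip to Lisbon.")
session.add_turn("assistant", "text", "Here is a draft itinerary.")
session.add_turn("user", "voice", "Make day two less busy.")
```

In this sketch the spoken follow-up can refer back to the typed request because both live in the same history; the modality tag is kept only so an interface could decide whether to reply with audio or text.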

Broader Implications for AI Interaction

This integration signals OpenAI's strategic direction toward conversational interfaces as the primary mode of human-AI interaction. Voice-based AI has demonstrated significant user engagement potential, particularly in scenarios where typing is impractical, such as while driving, multitasking, or working hands-free.

The feature also addresses a critical gap in accessibility. Users with visual impairments, dyslexia, or motor control challenges benefit substantially from voice-first interaction models. By placing voice mode in the main interface rather than relegating it to secondary menus, OpenAI acknowledges voice as a primary interaction method rather than an optional feature.

Market Context and Competitive Positioning

Voice capabilities have become increasingly important across the AI landscape. As competitors integrate voice features into their offerings, OpenAI's decision to prioritize voice mode accessibility demonstrates responsiveness to market demands and user preferences. The main interface integration positions ChatGPT competitively against other conversational AI platforms that may still require additional steps to access voice functionality.

The move also reflects broader industry trends toward multimodal AI systems that seamlessly blend text, voice, and potentially other input methods. Users increasingly expect AI assistants to understand and respond across multiple modalities without requiring explicit mode selection.

Implementation Considerations

Users transitioning to voice mode will notice lower latency and more natural response patterns, which makes exchanges feel more conversational. Error correction is also handled more intuitively: the AI can ask for clarification when needed rather than making assumptions about user intent.

The integration maintains privacy controls, with users retaining visibility into when voice data is being processed and stored. OpenAI's implementation includes options to disable voice features and clear conversation history as needed.

Key Takeaways

The integration of voice mode into ChatGPT's main interface represents a meaningful enhancement to user accessibility and interaction quality. By removing friction from voice-based conversations, OpenAI continues to broaden the reach and usability of its flagship AI platform. This development underscores the industry-wide shift toward voice as a primary interface for conversational AI systems.

Key Sources: Android Authority reporting on ChatGPT voice mode integration; OpenAI product announcements regarding Advanced Voice Mode deployment; Testing Catalog documentation on ChatGPT desktop voice functionality.