Nvidia Claims Technological Edge Over Google's AI Chips, Asserting Generation-Ahead Performance

Nvidia has positioned its GPU architecture as significantly more advanced than Google's custom AI processors, claiming a full generation advantage in computational capability and efficiency for large language models and enterprise AI workloads.

Nvidia's GPU Dominance in the AI Chip Market

Nvidia has made bold claims about its technological superiority over Google's custom-designed AI chips, asserting that its GPU architecture maintains a generational lead in performance and capability. This assertion comes as the competition for AI infrastructure intensifies, with major cloud providers and enterprises seeking the most efficient and powerful processors for training and deploying large language models.

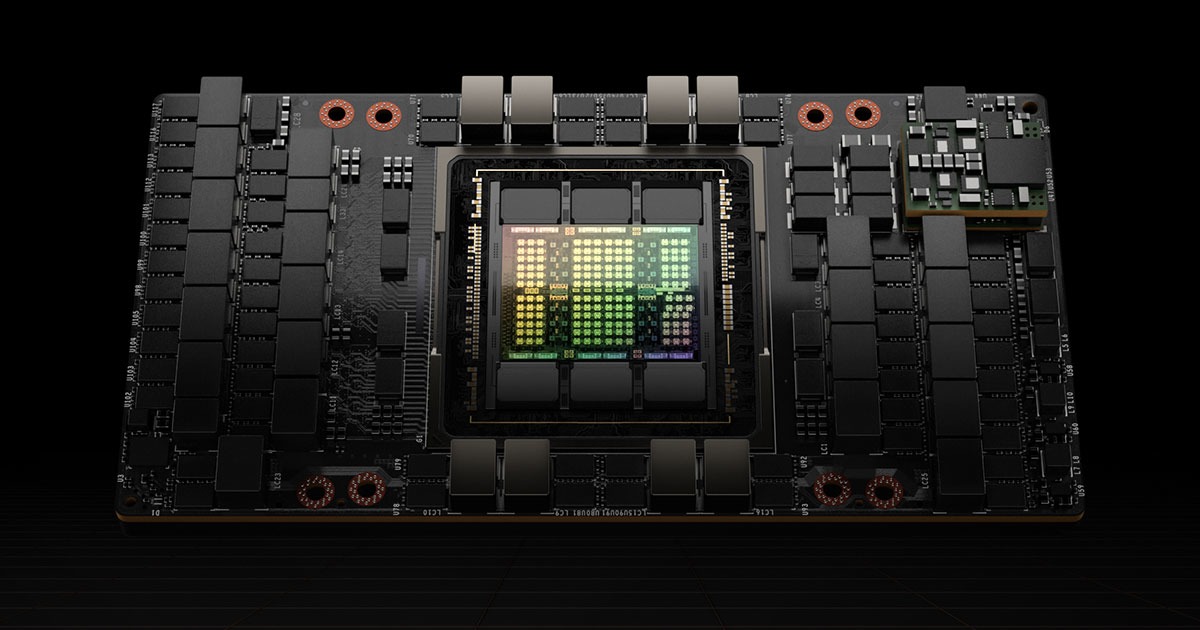

The company's positioning reflects a market in which Nvidia's H100 and newer GPUs have become the de facto standard for AI workloads across the industry. While Google has invested heavily in developing its own Tensor Processing Units (TPUs), which are optimized for specific machine learning tasks, Nvidia argues that its general-purpose GPU design offers superior flexibility and raw computational power.

Technical Architecture and Performance Claims

Nvidia's argument centers on several key technical differentiators:

- Generalized computing capability: Unlike Google's TPUs, which are optimized for specific tensor operations, Nvidia's GPUs maintain broader programmability and compatibility with existing software ecosystems

- Memory bandwidth and throughput: The H100 delivers substantially higher memory bandwidth than its predecessor, which matters for transformer-based models whose performance often depends on how quickly data can be moved to and from memory

- Ecosystem maturity: Nvidia's CUDA platform has accumulated years of optimization and third-party support, creating a significant software moat

The company contends that these factors combine to provide a meaningful performance advantage when running contemporary AI inference and training tasks. Nvidia also claims that its architecture delivers better performance per watt of power consumed, a critical metric for data center operators managing operational costs.
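Performance per watt is simply sustained throughput divided by power draw, so the comparison is easy to reproduce once independent measurements exist. The short Python sketch below illustrates the arithmetic only. The `Accelerator` class, the chip names, and every number in it are hypothetical placeholders, not figures published by Nvidia or Google.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Accelerator:
    """One measured accelerator; all fields are supplied by the person running the benchmark."""
    name: str
    throughput: float   # sustained work per second, e.g. tokens/s on a fixed workload
    power_watts: float  # average power draw during that same run


def perf_per_watt(chip: Accelerator) -> float:
    """Throughput delivered per watt of power consumed."""
    if chip.power_watts <= 0:
        raise ValueError("power_watts must be positive")
    return chip.throughput / chip.power_watts


def rank_by_efficiency(chips: list[Accelerator]) -> list[Accelerator]:
    """Order chips from most to least efficient."""
    return sorted(chips, key=perf_per_watt, reverse=True)


if __name__ == "__main__":
    # Placeholder values for illustration only; substitute real measurements.
    chips = [
        Accelerator("chip_a", throughput=1000.0, power_watts=500.0),
        Accelerator("chip_b", throughput=800.0, power_watts=320.0),
    ]
    for chip in rank_by_efficiency(chips):
        print(f"{chip.name}: {perf_per_watt(chip):.2f} units of work per second per watt")
```

In this made-up case the chip with lower raw throughput ranks first on efficiency, which is why raw speed and performance per watt are usually reported as separate figures.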

Market Context and Competitive Positioning

Google's TPU strategy represents a different approach to AI infrastructure. Rather than competing in the general-purpose GPU market, Google has focused on optimizing processors for its own internal workloads and for cloud customers running frameworks such as TensorFlow and JAX. This vertical integration has proven effective for Google's own AI services but has limited adoption outside the Google Cloud ecosystem.

Nvidia's broader market penetration gives it significant leverage. The company's GPUs are available across multiple cloud providers—AWS, Microsoft Azure, and others—as well as through direct enterprise purchases. This ubiquity has created network effects that reinforce Nvidia's market position.

Industry Implications

The competitive claims between Nvidia and Google reflect deeper questions about the future of AI infrastructure:

- Specialization vs. generalization: Whether purpose-built chips will eventually outperform general-purpose GPUs for AI workloads

- Ecosystem lock-in: How software compatibility and developer familiarity influence hardware adoption decisions

- Cost efficiency: Whether custom silicon can achieve better performance-per-dollar than established GPU architectures

Nvidia's assertion of a generational advantage, if substantiated through independent benchmarks, would suggest that the company's current market dominance is likely to persist in the near term. However, the rapid pace of innovation in AI chip design means that competitive advantages can erode quickly.

The competitive dynamics between Nvidia and Google will likely shape enterprise AI infrastructure decisions for the coming years, with performance claims requiring independent validation as both companies continue advancing their respective technologies.