OpenAI Releases GPT-5.1-Codex-Max System Card Detailing Safety

OpenAI releases GPT-5.1-Codex-Max system card, detailing safety, capabilities, and industry impact of its advanced coding model.

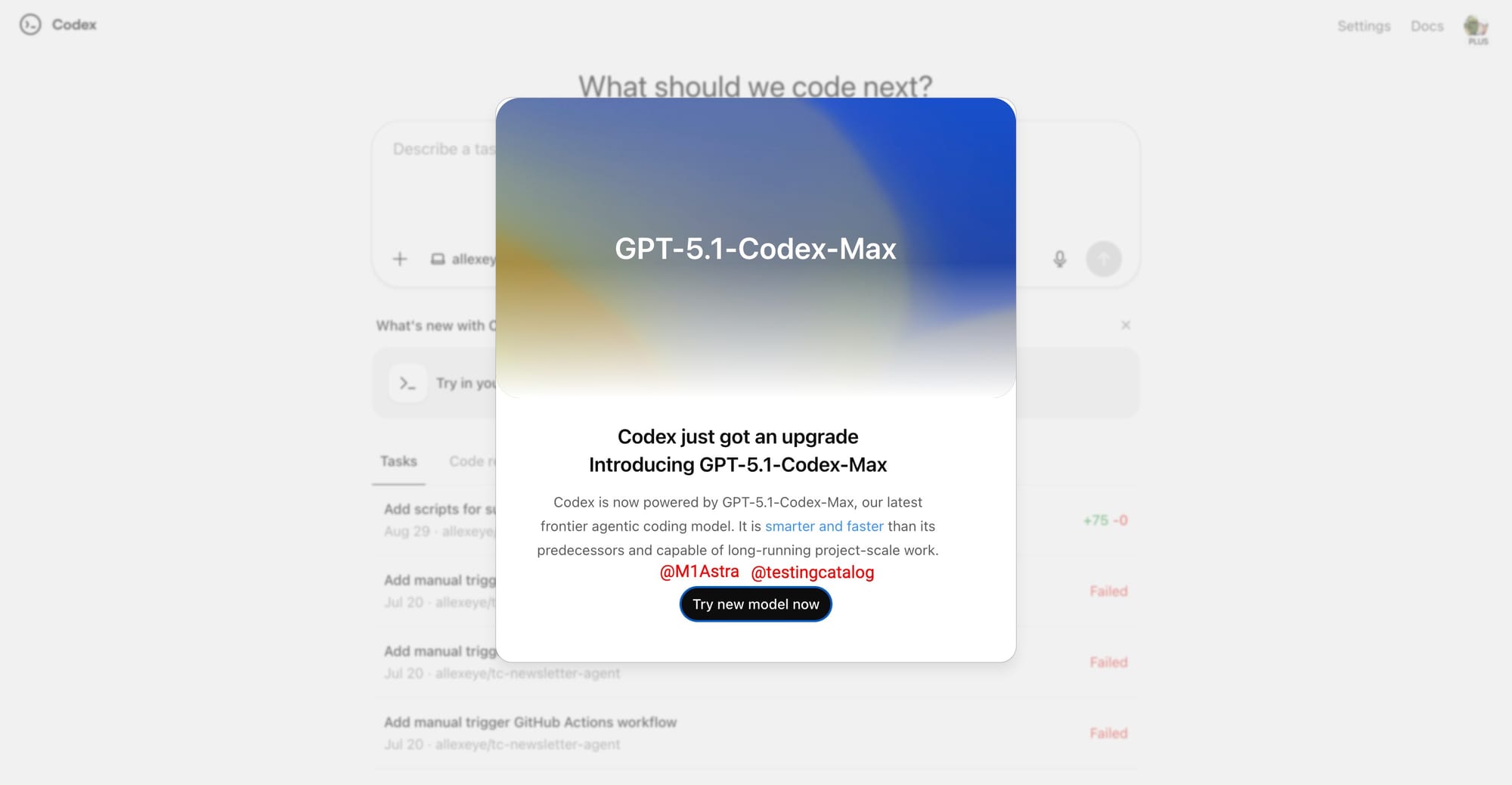

OpenAI Unveils GPT-5.1-Codex-Max System Card: Safety, Capabilities, and Industry Impact

OpenAI has released the official system card for GPT-5.1-Codex-Max, detailing the model’s safety architecture, technical capabilities, and deployment safeguards. The document marks a significant step in transparency for one of the most advanced agentic coding models to date, offering developers, researchers, and policymakers a comprehensive look at how OpenAI is managing the risks and opportunities associated with frontier AI systems.

What Is GPT-5.1-Codex-Max?

GPT-5.1-Codex-Max is an agentic coding model optimized for long-running, complex software engineering tasks. Unlike general-purpose conversational models, Codex-Max is designed to work autonomously over extended sessions that involve writing, reviewing, and shipping code. It is trained to use a technique OpenAI calls “compaction,” which lets it keep working across multiple context windows by condensing its history while preserving the context it still needs. This makes it particularly suited to tasks such as creating pull requests, reviewing code, frontend development, and technical Q&A.
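The system card does not describe how compaction is implemented. As a rough illustration of the general idea only, the sketch below keeps the most recent messages verbatim and folds older ones into a single summary once a token budget is exceeded. `Message`, `count_tokens`, `summarize`, and `compact` are hypothetical names. Counting words stands in for a real tokenizer, and the stub summarizer stands in for the model writing its own summary.

```python
from dataclasses import dataclass


@dataclass
class Message:
    role: str
    text: str


def count_tokens(msg: Message) -> int:
    # Crude stand-in for a real tokenizer: one token per whitespace-separated word.
    return len(msg.text.split())


def summarize(messages: list[Message]) -> Message:
    # Hypothetical summarizer. A real agent would likely ask the model to write
    # the summary; this stub keeps only the first line of each older message.
    lines = [f"{m.role}: {m.text.splitlines()[0]}" for m in messages if m.text]
    return Message(role="summary", text="Earlier work: " + " | ".join(lines))


def compact(history: list[Message], budget: int, keep_recent: int = 4) -> list[Message]:
    """Replace older messages with one summary once the history exceeds `budget` tokens."""
    total = sum(count_tokens(m) for m in history)
    if total <= budget or len(history) <= keep_recent:
        return history  # Still fits, or nothing old enough to compact.
    split = len(history) - keep_recent
    older, recent = history[:split], history[split:]
    return [summarize(older)] + recent


# Usage: 11 messages (74 "tokens") compacted against a 40-token budget.
history = [Message("user", "Refactor the parser module")] + [
    Message("assistant", f"Step {i}: edited file_{i}.py and ran tests") for i in range(10)
]
compacted = compact(history, budget=40, keep_recent=3)
print(len(compacted))     # 4
print(compacted[0].role)  # summary
```

A single pass like this can still leave the history over budget if the recent messages are large; a production agent would presumably re-check and compact again, but that policy is an assumption here.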

Official GPT-5.1-Codex-Max System Card (Source: OpenAI)

Safety Measures and Risk Mitigation

The system card outlines a multi-layered approach to safety, combining model-level and product-level mitigations:

- Model-Level Mitigations:

  - Specialized safety training to prevent harmful outputs, including prompt injection resistance and adversarial task handling.

  - Enhanced monitoring for tasks that could be misused in cybersecurity or biological domains.

- Product-Level Mitigations:

  - Agent sandboxing: Codex-Max operates in isolated environments to limit unintended system access.

  - Configurable network access: Organizations can restrict or allow network connectivity based on their security policies.

  - Customizable safeguards: Enterprises can apply tailored policies to further reduce risks.
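The system card does not specify how Codex enforces network policies. To make "restrict or allow network connectivity" concrete, here is a hypothetical host allowlist check of the kind an organization might apply to an agent sandbox. `ALLOWED_HOSTS` and `is_request_allowed` are illustrative names, not part of any Codex configuration format.

```python
from urllib.parse import urlparse

# Hypothetical organization policy: outbound access is denied by default and only
# these hosts (and their subdomains) may be reached from the agent sandbox.
ALLOWED_HOSTS = {"pypi.org", "github.com"}


def is_request_allowed(url: str, allowed_hosts: set[str] = ALLOWED_HOSTS) -> bool:
    """Return True if the URL's host is an allowed host or a subdomain of one."""
    host = (urlparse(url).hostname or "").lower()
    # Match on a dot boundary so "github.com.evil.example" is not treated as github.com.
    return any(host == h or host.endswith("." + h) for h in allowed_hosts)


print(is_request_allowed("https://pypi.org/simple/requests/"))  # True
print(is_request_allowed("https://api.github.com/repos"))       # True
print(is_request_allowed("https://evil.example.com/exfil"))     # False
print(is_request_allowed("https://github.com.evil.example/x"))  # False
```

Denying by default and allowlisting specific hosts is one common way to keep a sandboxed agent able to fetch dependencies while limiting where it can send data.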

OpenAI states that it is treating GPT-5.1-Codex-Max as High capability in the biological and chemical domain, reflecting its potential for misuse in these sensitive areas. However, under OpenAI’s Preparedness Framework, the model does not reach the High capability threshold in cybersecurity or AI self-improvement.

Technical Capabilities and Evaluation

OpenAI evaluated GPT-5.1-Codex-Max’s cybersecurity capabilities with capture-the-flag (CTF) style challenges in categories including:

- Web Application Exploitation (web): Tasks required the model to exploit vulnerable software exposed through a network service.

- Reverse Engineering (rev): Challenges involved analyzing provided programs to identify and exploit vulnerabilities.

Despite its advanced capabilities, OpenAI notes that the model does not yet meet the threshold for high cybersecurity capability, though it is “very capable” in this domain. The company expects future models to cross this threshold as AI capabilities continue to evolve rapidly.

Industry Impact and Developer Use

For developers, GPT-5.1-Codex-Max is aimed at longer-running, more autonomous software engineering work. The model is designed to be used within Codex or Codex-like harnesses, where it supports:

- Automated code generation and review

- Integration with development workflows

- Scalable, persistent agent operations

OpenAI also released gpt-oss-safeguard, a set of open-weight reasoning models for safety classification, allowing developers to apply and iterate on custom safety policies. This move supports greater transparency and control for organizations deploying AI in production environments.

Context and Implications

The release of the GPT-5.1-Codex-Max system card comes amid growing scrutiny of AI safety and regulation. By publishing detailed safety measures and evaluation criteria, OpenAI continues its practice of documenting frontier model releases in system cards. The model’s focus on agentic coding tasks highlights the shift toward AI systems that can operate autonomously in complex, real-world environments.

As AI continues to advance, the balance between capability and safety will remain a critical challenge. OpenAI’s approach with GPT-5.1-Codex-Max suggests a commitment to both innovation and responsibility, offering a blueprint for future frontier AI systems.

Image Credits:

- GPT-5.1-Codex-Max System Card: OpenAI

- OpenAI Logo: OpenAI

- AI Safety Framework Diagram: OpenAI Research (2025)

This article is based on official documentation and announcements from OpenAI.