OpenAI's Sora 2 Amplifies Deepfake Crisis Concerns: What Experts Are Saying

OpenAI's latest video generation model raises critical questions about the authenticity of synthetic media and the accelerating deepfake threat. Industry experts warn that regulatory and technical safeguards are urgently needed.

The release of OpenAI's Sora 2 video generation model has reignited a critical debate within the AI and cybersecurity communities: as synthetic media technology becomes increasingly sophisticated, how can society adequately protect against malicious deepfake proliferation?

Sora 2 represents a significant leap in generative video capabilities, producing high-fidelity video from text prompts with unprecedented realism. While the technology enables legitimate creative and commercial applications, security researchers and policy experts warn that it could be weaponized for disinformation campaigns, fraud, and identity theft.

The Deepfake Escalation Problem

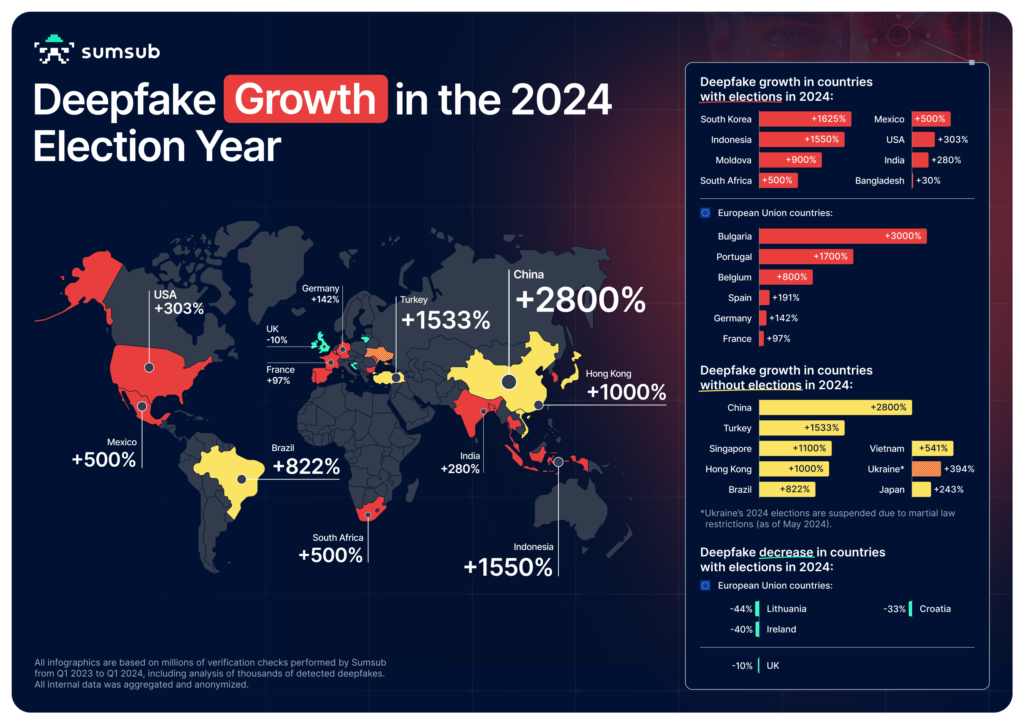

By 2024, the deepfake crisis had already reached a critical scale. Data from election security monitoring organizations points to a sharp rise in synthetic media abuse, particularly in countries holding major elections. Threat actors have grown increasingly adept at producing convincing fake videos of political figures, celebrities, and private individuals, often for extortion, election interference, or reputational damage.

The core concern with Sora 2 is democratization. Creating deepfakes previously required specialized technical knowledge and significant computing resources. Sora 2's user-friendly interface and cloud-based deployment lower the barrier to entry, potentially enabling bad actors with minimal technical expertise to generate convincing synthetic content at scale.

Key Technical Vulnerabilities

- Facial reenactment accuracy: Sora 2 can convincingly replicate facial expressions, speech patterns, and body language with minimal source material

- Temporal consistency: Unlike earlier models, the system maintains coherent motion and lighting across extended video sequences

- Weaker detection signatures: Generated content exhibits fewer of the statistical artifacts that traditional deepfake detection tools rely on

- Speed of production: Content generation occurs in minutes rather than hours, accelerating malicious deployment timelines
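
To make the point about statistical artifacts concrete, here is a toy sketch of one kind of signal older detectors looked for: erratic lighting between frames. Everything in it is hypothetical. Frames are modeled as plain lists of grayscale values, and `flicker_score`, `looks_suspicious`, and the threshold are illustrative names and numbers, not part of any real detection tool. A generator with strong temporal consistency simply scores low on a check like this, which is why such signals lose their value.

```python
from statistics import mean, pstdev

# Hypothetical model: a frame is a 2D list of grayscale pixel values (0-255).
Frame = list[list[int]]


def frame_brightness(frame: Frame) -> float:
    """Average pixel intensity of a single frame."""
    return mean(px for row in frame for px in row)


def flicker_score(frames: list[Frame]) -> float:
    """Spread of frame-to-frame brightness changes.

    Erratic lighting between frames raises the score; a steady fade or a
    temporally consistent generator keeps it close to zero.
    """
    if len(frames) < 2:
        return 0.0
    levels = [frame_brightness(f) for f in frames]
    deltas = [b - a for a, b in zip(levels, levels[1:])]
    return pstdev(deltas)


def looks_suspicious(frames: list[Frame], threshold: float = 5.0) -> bool:
    # Illustrative threshold only, not calibrated on real footage.
    return flicker_score(frames) > threshold
```

For example, ten frames that brighten by the same amount each step score 0.0, while frames whose brightness jumps between 100, 140, 95, and 150 score roughly 44 and are flagged.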

Industry Response and Safeguards

OpenAI has implemented several protective measures, including watermarking systems and usage policies prohibiting non-consensual intimate content and impersonation. However, experts argue these safeguards remain insufficient given the technology's capabilities.

The company has committed to:

- Implementing digital provenance tracking

- Developing detection tools for Sora-generated content

- Collaborating with law enforcement on abuse reporting

- Restricting access during the initial rollout phase
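
Provenance tracking generally means keeping a tamper-evident history of how a piece of media was created and edited. The sketch below illustrates the chaining idea under stated assumptions: it is not OpenAI's system and not the C2PA manifest format, and `ProvenanceEntry`, `append_entry`, and `verify_log` are hypothetical names. Each entry stores the hash of the previous entry, so rewriting any earlier step breaks the next link.

```python
import hashlib
import json
from dataclasses import dataclass


@dataclass
class ProvenanceEntry:
    action: str        # e.g. "generated", "trimmed"
    content_hash: str  # SHA-256 of the video bytes after this action
    prev_hash: str     # hash of the previous entry, "" for the first entry

    def entry_hash(self) -> str:
        # Hash every field so that editing any of them breaks the next link.
        payload = json.dumps([self.action, self.content_hash, self.prev_hash])
        return hashlib.sha256(payload.encode()).hexdigest()


def append_entry(log: list[ProvenanceEntry], action: str, content: bytes) -> None:
    """Record an action, linking it to the hash of the previous entry."""
    prev = log[-1].entry_hash() if log else ""
    log.append(ProvenanceEntry(action, hashlib.sha256(content).hexdigest(), prev))


def verify_log(log: list[ProvenanceEntry], final_content: bytes) -> bool:
    """Check every link, and that the last entry matches the file in hand."""
    if not log:
        return False
    expected_prev = ""
    for entry in log:
        if entry.prev_hash != expected_prev:
            return False
        expected_prev = entry.entry_hash()
    return log[-1].content_hash == hashlib.sha256(final_content).hexdigest()
```

On its own, a chain like this only exposes edits to earlier entries; a forger could rebuild the entire log from scratch, so real provenance systems also sign the entries with a key the forger does not hold.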

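Yet security researchers note that determined threat actors routinely circumvent such protections, whether through technical workarounds such as cropping out visible watermarks or re-encoding video to strip embedded metadata, or by simply violating usage policies.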
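
How easily a watermark can be defeated is clearest with the most fragile kind: hiding bits in the least significant bit (LSB) of pixel values. This is a hypothetical textbook sketch, not OpenAI's scheme, and production watermarks are engineered to be far more robust. Pixels are modeled as a flat list of 8-bit values, and `embed_watermark`, `extract_watermark`, and `requantize` are illustrative names.

```python
def embed_watermark(pixels: list[int], bits: list[int]) -> list[int]:
    """Overwrite the lowest bit of the first len(bits) pixels with watermark bits."""
    marked = pixels.copy()
    for i, bit in enumerate(bits):
        marked[i] = (marked[i] & ~1) | bit  # clear the low bit, then set it to `bit`
    return marked


def extract_watermark(pixels: list[int], n: int) -> list[int]:
    """Read back the lowest bit of the first n pixels."""
    return [p & 1 for p in pixels[:n]]


def requantize(pixels: list[int], step: int = 4) -> list[int]:
    """Crudely simulate lossy re-encoding by rounding values down to a multiple of `step`."""
    return [(p // step) * step for p in pixels]
```

The marked pixels differ from the originals by at most one intensity level, so the mark is invisible, yet after `requantize` every extracted bit reads 0 and the watermark is gone. Any transformation that rewrites low-order pixel values, such as lossy re-encoding, erases a mark like this one.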

Regulatory Gaps and Policy Challenges

The regulatory landscape remains fragmented. The EU's AI Act imposes transparency obligations on generative AI systems, including a requirement to disclose deepfakes as artificially generated, but its enforcement mechanisms are still developing. The United States lacks comprehensive federal legislation specifically addressing synthetic media authentication and liability.

Key policy questions remain unresolved:

- Who bears liability for malicious deepfake creation using commercial tools?

- What authentication standards should become mandatory for video content in sensitive contexts?

- How can international coordination prevent regulatory arbitrage by bad actors?

Path Forward: Technical and Institutional Solutions

Experts recommend a multi-layered approach combining technological innovation with regulatory clarity:

Technical measures should include cryptographic authentication standards, blockchain-based provenance systems, and advanced detection algorithms trained on emerging synthetic media. Industry collaboration on detection datasets is essential, as isolated efforts cannot keep pace with model evolution.
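
A minimal sketch of the principle behind cryptographic content authentication: a trusted device or publisher computes a tag over the exact bytes of a video, and anyone holding the verification key can later detect any change. The function names and key are hypothetical, and HMAC (a shared-secret scheme from Python's standard library) stands in for the public-key signatures that real standards such as C2PA rely on, so this illustrates the idea rather than any deployed standard.

```python
import hashlib
import hmac


def sign_video(video: bytes, key: bytes) -> str:
    """Return a hex tag binding the key holder to these exact bytes."""
    digest = hashlib.sha256(video).digest()
    return hmac.new(key, digest, hashlib.sha256).hexdigest()


def is_authentic(video: bytes, tag: str, key: bytes) -> bool:
    """Recompute the tag and compare it in constant time."""
    expected = sign_video(video, key)
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(expected, tag)
```

A tag only proves that the bytes are unchanged since signing; it says nothing about whether the footage was genuine when it was signed, which is why authentication complements detection rather than replacing it.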

Institutional responses must establish clear liability frameworks, mandatory disclosure requirements for synthetic content, and coordinated international standards. Media literacy initiatives should also prepare the public to critically evaluate video authenticity.

Key Sources

- OpenAI's official Sora 2 technical documentation and safety guidelines

- Recent deepfake surge data from election security monitoring organizations

- Cybersecurity research on synthetic media detection and authentication

The challenge ahead is clear: as generative AI capabilities advance rapidly, society must develop equally sophisticated detection, authentication, and regulatory mechanisms. Without urgent action, Sora 2 and successor models risk becoming primary vectors for large-scale disinformation and fraud campaigns.