Safeguarding Democracy: Addressing AI's Emerging Threats

AI's rapid growth poses threats to democracy. Efforts to mitigate these dangers focus on misinformation, manipulation, and governance strategies.

Artificial intelligence (AI) is reshaping societies globally, but its rapid growth poses significant threats to democratic institutions. In 2025, efforts to mitigate these dangers have intensified, with researchers, policymakers, and civil society focusing on AI-driven misinformation, manipulation, and authoritarian uses, alongside strategies to govern AI while preserving democratic values.

AI's Emerging Threats to Democracy

AI systems, particularly large language models and multi-agent AI swarms, can amplify misinformation and manipulate public opinion at unprecedented speed and scale. Sophisticated AI-driven influence campaigns manufacture a synthetic consensus by seeding coordinated narratives across ideological echo chambers and social media platforms, creating the illusion of widespread grassroots agreement where none exists. This artificial consensus distorts public discourse and undermines the independence of collective judgment, a critical component of democratic decision-making. Citizens may be swayed more by perceived peer agreement than by factual evidence, which deepens polarization and erodes trust in democratic institutions.

These AI swarms are adaptive, context-aware, and far more capable than traditional botnets, which lets them exploit social media algorithms that reward engagement even when the content is misleading or divisive. Such manipulation threatens the wisdom-of-crowds effect, in which diverse and independent judgments lead to better collective decisions. By drowning out authentic voices, AI swarms reduce the diversity of inputs and leave democratic deliberation vulnerable to covert influence operations.

Additionally, AI technologies are being weaponized by authoritarian regimes to control populations and coerce other states, as well as by criminals to conduct scams and cyberattacks. OpenAI reports disrupting over 40 networks engaged in such malicious uses, highlighting the ongoing challenge of policing AI misuse globally.

Efforts to Govern AI for Democratic Protection

In response, multiple organizations and governments advocate for proactive AI governance frameworks that balance innovation with protections for democracy and civil liberties. The nonprofit Protect Democracy’s AI for Democracy Action Lab works on:

- Crafting AI policies that respect constitutional rights such as free speech and privacy

- Using AI tools to monitor election subversion and combat voter suppression

- Promoting social media and AI platform design interventions to amplify accurate information and reduce online harms

At the academic level, Harvard’s Ash Center and Stanford’s Institute for Human-Centered AI explore how to rewire democratic institutions to adapt to AI’s impact. They emphasize the need for democratic participation in AI governance, moving beyond top-down approaches to include the communities affected by AI deployment. That participation includes discussions in classrooms and civic spaces about ethical AI use and regulatory frameworks.

International bodies like the OECD also highlight challenges governments face in adopting AI while managing cyber risks, public resistance, and digital divides, underscoring the complexity of governing AI in democratic societies.

Silicon Valley’s Role and Criticisms

Silicon Valley’s dominant AI development model, centered on large-scale, resource-intensive models, faces criticism for its environmental footprint, its lack of transparency, and its potential to destabilize democracy through unchecked expansion. Journalists like Karen Hao argue that the greatest risk lies not in speculative, sci-fi scenarios but in the current trajectory of AI development, which consolidates power in the hands of a few tech giants while sidelining democratic oversight.

However, Hao also points to alternative AI approaches built on smaller, task-specific models. These do not carry the same risks and could be used responsibly to solve real-world problems without exacerbating democratic vulnerabilities.

Context and Implications

The ongoing race to counter AI’s threats to democracy marks a critical juncture where technological advancement intersects with societal values. The capacity of AI to influence public opinion and political processes demands urgent, coordinated action from governments, civil society, researchers, and the tech industry.

Key challenges remain:

- Designing AI governance that prevents misuse without stifling innovation

- Ensuring democratic participation in AI policy-making processes

- Developing technological safeguards and transparency mechanisms to detect AI-driven manipulation

- Addressing the power concentration in AI development and data control

As AI continues to evolve, the future of democracy may hinge on whether societies can implement robust guardrails that protect truth, autonomy, and fairness in the digital age.

Relevant Images

- Visualizations of AI-driven social media influence campaigns or botnet activity

- Photos of key figures such as Karen Hao, AI researchers, or policymakers involved in AI governance

- Logos of organizations like OpenAI, Protect Democracy, and the Ash Center

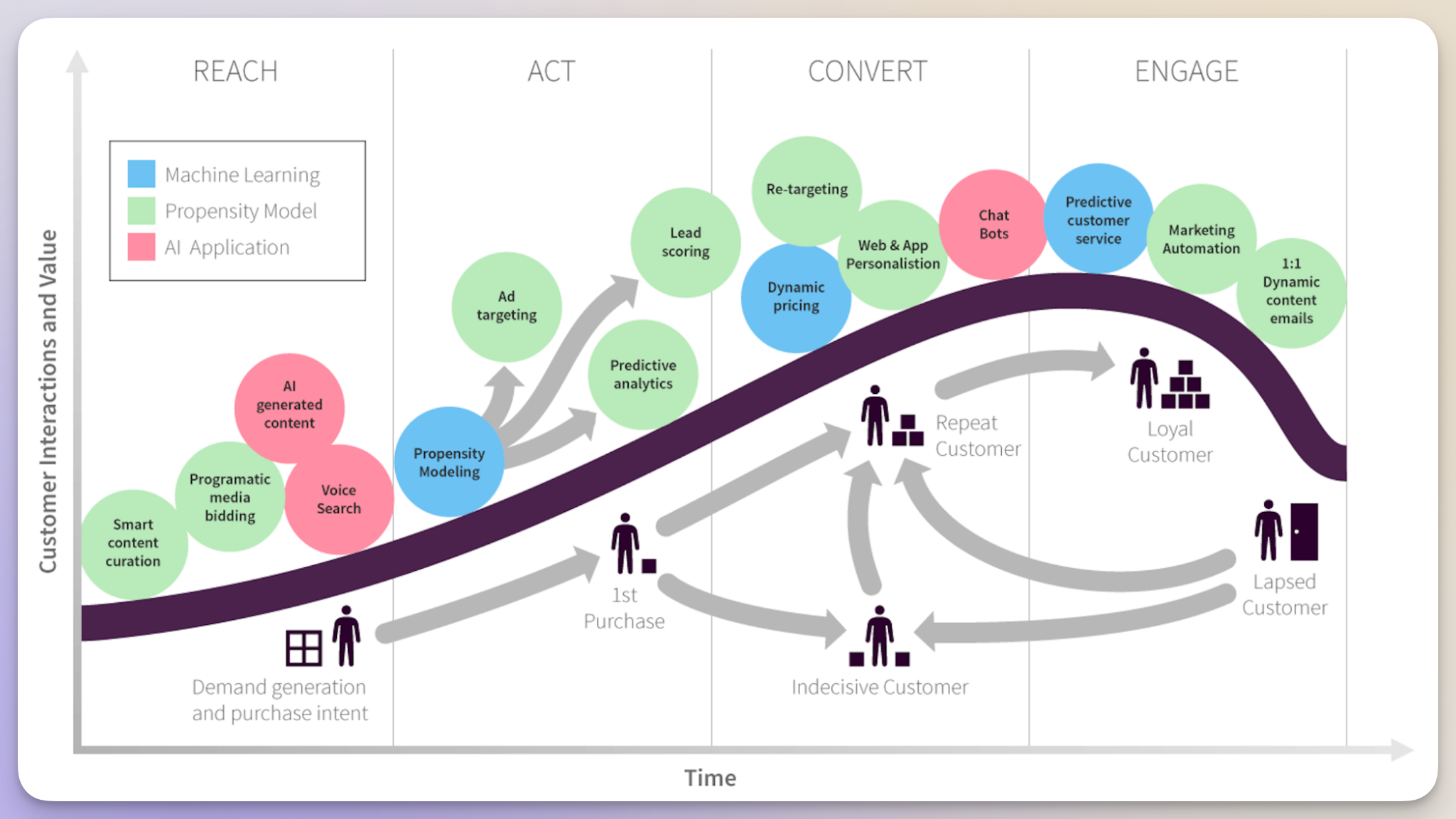

- Infographics showing pathways of AI harm to democracy and governance frameworks

These images would help illustrate the complex dynamics of AI’s democratic risks and the multifaceted efforts underway to address them.