ChatGPT Zero-Click Vulnerability Exposes User Data to Silent Theft

A critical flaw in ChatGPT allows attackers to steal sensitive user data without any user interaction, marking a significant shift in how AI assistants can be exploited. Security researchers have uncovered multiple attack vectors targeting the platform.

The Silent Threat to AI Security

The race to secure AI platforms just hit a critical inflection point. Researchers have discovered a zero-click vulnerability in ChatGPT that lets attackers extract user data without any action from the victim, a fundamental departure from traditional threats that depend on user participation. The discovery underscores an expanding attack surface in large language models that reaches well beyond simple prompt injection.

According to Infosecurity Magazine, the zero-click attack represents a new class of threat where malicious actors can compromise ChatGPT sessions passively. The implications are severe: conversations containing sensitive information, API keys, personal data, and proprietary business logic could be harvested without users knowing their accounts have been breached.

How the Attack Works

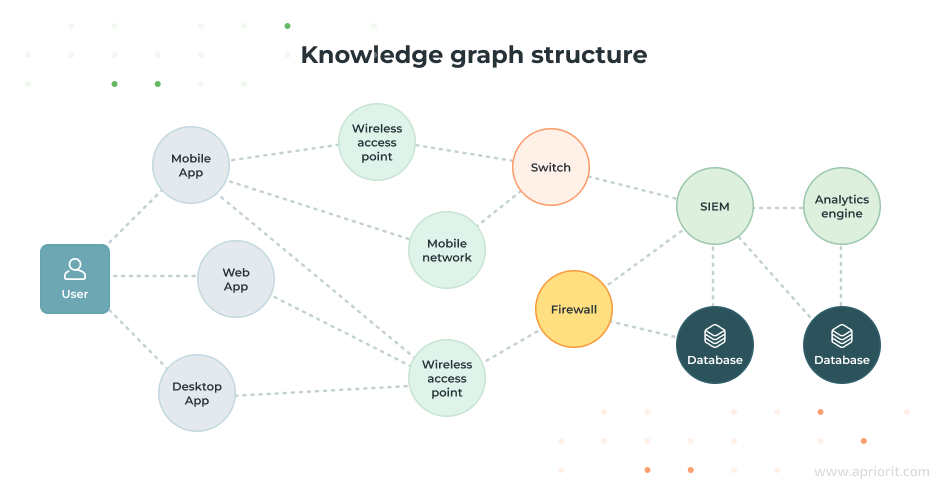

Security researchers at Radware identified a sophisticated attack framework called ZombieAgent that demonstrates how attackers can take control of ChatGPT sessions. The mechanism exploits the platform's architecture to inject malicious instructions that execute silently in the background.

The attack chain typically involves:

- Initial compromise: Malicious browser extensions or compromised websites inject code into the ChatGPT interface

- Silent execution: Attackers issue commands that ChatGPT processes without user awareness

- Data exfiltration: Sensitive conversation data is extracted and transmitted to attacker-controlled servers

SecurityWeek reported on the researchers' demonstration of taking over ChatGPT accounts through this vulnerability, showing that the attack is practical rather than theoretical.

The Broader Ecosystem Problem

The vulnerability isn't isolated to ChatGPT's core platform. The Hacker News reported that malicious Chrome extensions have been actively stealing ChatGPT conversations at scale. Because these extensions run inside the browser, they can read conversation data directly from the page, outside the reach of OpenAI's server-side protections.

OX Security expanded on this analysis, reporting that attackers are targeting conversations across multiple AI platforms, including DeepSeek. The threat therefore extends beyond ChatGPT to the broader ecosystem of AI assistants that users rely on daily.
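One way to check whether installed extensions are even in a position to do this: a Chrome extension can read ChatGPT pages only if its manifest grants access to them, either through host permissions or through content scripts whose `matches` patterns cover the site. The Python sketch below is a hypothetical helper, not a tool from any of the vendors cited here. It walks a local Chrome profile and lists extensions whose `manifest.json` requests broad or ChatGPT-specific host access. The default directory (the Linux path for the `Default` profile) and the hostname list are assumptions; on macOS the folder is typically `~/Library/Application Support/Google/Chrome/Default/Extensions`, and on Windows `%LOCALAPPDATA%\Google\Chrome\User Data\Default\Extensions`.

```python
import json
import sys
from pathlib import Path

# Assumption: default Chrome profile location on Linux; pass another path as argv[1].
DEFAULT_EXT_DIR = Path.home() / ".config" / "google-chrome" / "Default" / "Extensions"

# Match patterns that let an extension run on every site, ChatGPT included.
BROAD_PATTERNS = {"<all_urls>", "*://*/*", "http://*/*", "https://*/*"}
# Assumed hostnames for the ChatGPT web app; extend as needed.
CHATGPT_HOSTS = ("chatgpt.com", "openai.com")


def host_patterns(manifest: dict) -> list[str]:
    """Collect every URL match pattern the manifest requests."""
    patterns = list(manifest.get("host_permissions", []))  # Manifest V3
    patterns += manifest.get("optional_host_permissions", [])
    # Manifest V2 lists host patterns alongside API permissions such as "tabs".
    patterns += [
        p for p in manifest.get("permissions", [])
        if isinstance(p, str) and ("://" in p or p == "<all_urls>")
    ]
    for script in manifest.get("content_scripts", []):
        patterns += script.get("matches", [])
    return patterns


def chatgpt_exposure(manifest: dict) -> list[str]:
    """Return the patterns that would give the extension access to ChatGPT pages."""
    return [
        p for p in host_patterns(manifest)
        if p in BROAD_PATTERNS or any(host in p for host in CHATGPT_HOSTS)
    ]


def scan(ext_dir: Path) -> dict[str, list[str]]:
    """Map each installed extension (id and name) to its ChatGPT-relevant patterns."""
    findings = {}
    # Chrome stores unpacked extensions as <extension-id>/<version>/manifest.json.
    for manifest_path in sorted(ext_dir.glob("*/*/manifest.json")):
        try:
            manifest = json.loads(manifest_path.read_text(encoding="utf-8-sig"))
        except (OSError, json.JSONDecodeError):
            continue  # unreadable or malformed manifest: skip it
        hits = chatgpt_exposure(manifest)
        if hits:
            ext_id = manifest_path.parent.parent.name
            findings[f"{ext_id} ({manifest.get('name', '?')})"] = hits
    return findings


if __name__ == "__main__":
    root = Path(sys.argv[1]) if len(sys.argv) > 1 else DEFAULT_EXT_DIR
    for extension, patterns in scan(root).items():
        print(f"{extension}: {', '.join(patterns)}")
```

A hit is not proof of malice, since many legitimate tools request `<all_urls>`; the output is a shortlist for manual review. The heuristic also misses access granted at runtime through `activeTab`, and localized extensions may report names such as `__MSG_appName__` instead of a readable title.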

What's at Risk

The data exposure potential is substantial:

- API keys and credentials stored in conversations for development workflows

- Healthcare information discussed with ChatGPT for medical research or personal health queries

- Business strategy documents analyzed through the platform

- Financial data and transaction details referenced in conversations

- Personal identification information inadvertently shared during interactions

Malwarebytes raised critical concerns about health-related data exposure, particularly as users increasingly rely on ChatGPT for medical information and health decision-making.

Compounding Vulnerabilities

The threat landscape is further complicated by ChatGPT's memory feature. As Dark Reading reported, memory is vulnerable to prompt injection attacks that can manipulate stored user preferences and historical data.

What Users Should Know

ESET recommends immediate protective measures:

- Avoid sharing sensitive credentials, API keys, or personal identifiers in ChatGPT conversations

- Audit browser extensions for suspicious permissions and remove unused tools

- Enable two-factor authentication on OpenAI accounts

- Monitor account activity logs for unauthorized access

- Consider using ChatGPT through official applications rather than web browsers when handling sensitive data
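The first recommendation can be partly automated before text ever reaches a chat window. The Python sketch below is a hypothetical local pre-check, not part of ESET's guidance or any OpenAI tooling. It scans a prompt for a few credential formats and replaces matches with placeholders. The regular expressions are illustrative assumptions (an `sk-` prefix for OpenAI-style keys, `AKIA` plus 16 characters for AWS access key IDs, `ghp_` plus 36 characters for classic GitHub tokens, and PEM private-key blocks); they are neither exhaustive nor authoritative, so a clean result does not mean a prompt is safe to share.

```python
import re

# Illustrative credential patterns (assumptions, not an official or complete list).
SECRET_PATTERNS = {
    "openai_key": re.compile(r"\bsk-[A-Za-z0-9_-]{20,}"),
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "github_token": re.compile(r"\bghp_[A-Za-z0-9]{36}\b"),
    "private_key_block": re.compile(
        r"-----BEGIN [A-Z ]*PRIVATE KEY-----.*?-----END [A-Z ]*PRIVATE KEY-----",
        re.DOTALL,  # let .*? span the multi-line key body
    ),
}


def redact(text: str) -> tuple[str, list[str]]:
    """Replace likely secrets with placeholders; return the new text and the kinds found."""
    found = []
    for kind, pattern in SECRET_PATTERNS.items():
        text, count = pattern.subn(f"[REDACTED:{kind}]", text)
        if count:
            found.append(kind)
    return text, found


if __name__ == "__main__":
    prompt = "Why does boto3 reject AKIAABCDEFGHIJKLMNOP?"
    clean, kinds = redact(prompt)
    print(clean)  # Why does boto3 reject [REDACTED:aws_access_key_id]?
    print(kinds)  # ['aws_access_key_id']
```

Redaction only limits what a conversation contains; it does nothing against an extension or injected instruction that reads other data on the page, so it complements rather than replaces the other measures above.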

The Larger Narrative

This vulnerability exposes a fundamental challenge in AI security: the assumption that user-initiated interactions define the attack surface. As AI platforms become more integrated into business workflows and personal decision-making, the security model must evolve beyond traditional endpoint protection.

The zero-click nature of this threat signals that the industry's current approach to AI security, which focuses primarily on user education and input validation, is insufficient. Organizations deploying ChatGPT for sensitive workflows must now treat passive data theft as a realistic threat vector, one that calls for architectural changes and behavioral monitoring.