OpenAI's Codex: How Self-Improvement Capabilities Are Reshaping AI-Assisted Development

OpenAI's Codex has evolved from a code completion tool into a system capable of building and refining its own capabilities, marking a significant shift in how AI models approach software development tasks.


OpenAI's Codex has undergone a fundamental transformation since its initial release, evolving from a straightforward code completion engine into a more sophisticated system capable of autonomously developing and enhancing its own functionality. This shift represents a critical inflection point in how large language models approach software engineering challenges.

The Evolution of Autonomous Capability Building

Codex's journey reflects a broader trend in AI development: models that can introspect and improve their own performance. Rather than relying solely on human-directed updates and refinements, Codex has begun leveraging its underlying capabilities to identify gaps in its functionality and propose solutions. This self-directed improvement mechanism operates alongside traditional model updates, creating a hybrid approach to capability expansion.

The system's architecture now enables it to:

- Analyze its own performance patterns across diverse coding tasks and languages

- Identify recurring failure modes and generate targeted improvements

- Test and validate proposed enhancements before integration

- Adapt to domain-specific requirements with minimal human intervention
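The article does not say how Codex performs this analysis internally. As a purely illustrative sketch of the second step, identifying recurring failure modes, the code below starts from hypothetical evaluation records, counts failures by language and error type, and keeps only those that recur. The `EvalResult` record, the `recurring_failure_modes` function, the `min_count` threshold, and the error labels are assumptions made for this example. They are not part of any OpenAI API.

```python
from collections import Counter
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class EvalResult:
    """Hypothetical record of one evaluation task and its outcome."""
    task_id: str
    language: str
    passed: bool
    error_type: Optional[str] = None  # None when the task passed


def recurring_failure_modes(
    results: List[EvalResult], min_count: int = 2
) -> List[Tuple[Tuple[str, Optional[str]], int]]:
    """Group failed tasks by (language, error_type); keep modes seen >= min_count times.

    The returned pairs are ordered from most to least frequent.
    """
    counts = Counter((r.language, r.error_type) for r in results if not r.passed)
    return [(mode, n) for mode, n in counts.most_common() if n >= min_count]


# Illustrative data only; the error labels are arbitrary example strings.
results = [
    EvalResult("t1", "python", False, "TypeError"),
    EvalResult("t2", "python", False, "TypeError"),
    EvalResult("t3", "rust", False, "BorrowError"),
    EvalResult("t4", "go", True),
]
print(recurring_failure_modes(results))  # [(('python', 'TypeError'), 2)]
```

Grouping by a (language, error type) key is one simple choice; a real system could cluster on richer signals such as stack traces or task categories.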

Technical Architecture and Implementation

The foundation of Codex's self-improvement lies in its ability to generate code that extends its own capabilities. When the model encounters tasks it cannot adequately solve, it can now produce helper functions, abstraction layers, and optimization routines that enhance its subsequent performance on similar problems.

This represents a departure from earlier code generation models, which operated as static systems awaiting external refinement. Codex's approach creates a feedback loop where capability gaps directly inform the generation of solutions, effectively allowing the model to bootstrap its own improvements.
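The feedback loop described above can be illustrated with a small, self-contained sketch. Here a hypothetical generator function stands in for the model. It receives the failure messages gathered so far and returns a new candidate, which is checked against a fixed test suite until it passes or a round limit is reached. This is a generic generate-test-refine loop under those assumptions, not a description of how Codex is actually built. The names `refine`, `run_tests`, and `toy_generator` are invented for illustration.

```python
from typing import Callable, List, Optional, Tuple

Candidate = Callable[[int], int]
# Hypothetical: maps the feedback gathered so far to a new candidate solution.
CandidateGenerator = Callable[[List[str]], Candidate]
TestCase = Tuple[int, int]  # (input, expected output)


def run_tests(candidate: Candidate, tests: List[TestCase]) -> List[str]:
    """Return one failure message per test the candidate does not pass."""
    failures = []
    for arg, expected in tests:
        try:
            got = candidate(arg)
        except Exception as exc:  # a crash is recorded as a failure, not re-raised
            failures.append(f"input {arg}: raised {type(exc).__name__}")
            continue
        if got != expected:
            failures.append(f"input {arg}: expected {expected}, got {got}")
    return failures


def refine(
    generate: CandidateGenerator, tests: List[TestCase], max_rounds: int = 3
) -> Tuple[Optional[Candidate], int]:
    """Generate, test, and feed failures back until every test passes.

    Returns the passing candidate and the round it passed in,
    or (None, max_rounds) if no candidate passed.
    """
    feedback: List[str] = []
    for round_no in range(1, max_rounds + 1):
        candidate = generate(feedback)
        failures = run_tests(candidate, tests)
        if not failures:
            return candidate, round_no
        feedback.extend(failures)  # failures become input to the next attempt
    return None, max_rounds


def toy_generator(feedback: List[str]) -> Candidate:
    """Hypothetical stand-in for a model: wrong at first, corrected after any feedback."""
    if not feedback:
        return lambda x: x * 2  # first guess doubles instead of squaring
    return lambda x: x * x


square_tests = [(2, 4), (3, 9)]
solution, rounds = refine(toy_generator, square_tests)
print(rounds)  # 2: the first guess fails on input 3, the second passes
```

The round limit matters in practice: without it, a generator that never converges would loop indefinitely.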

Practical Implications for Developers

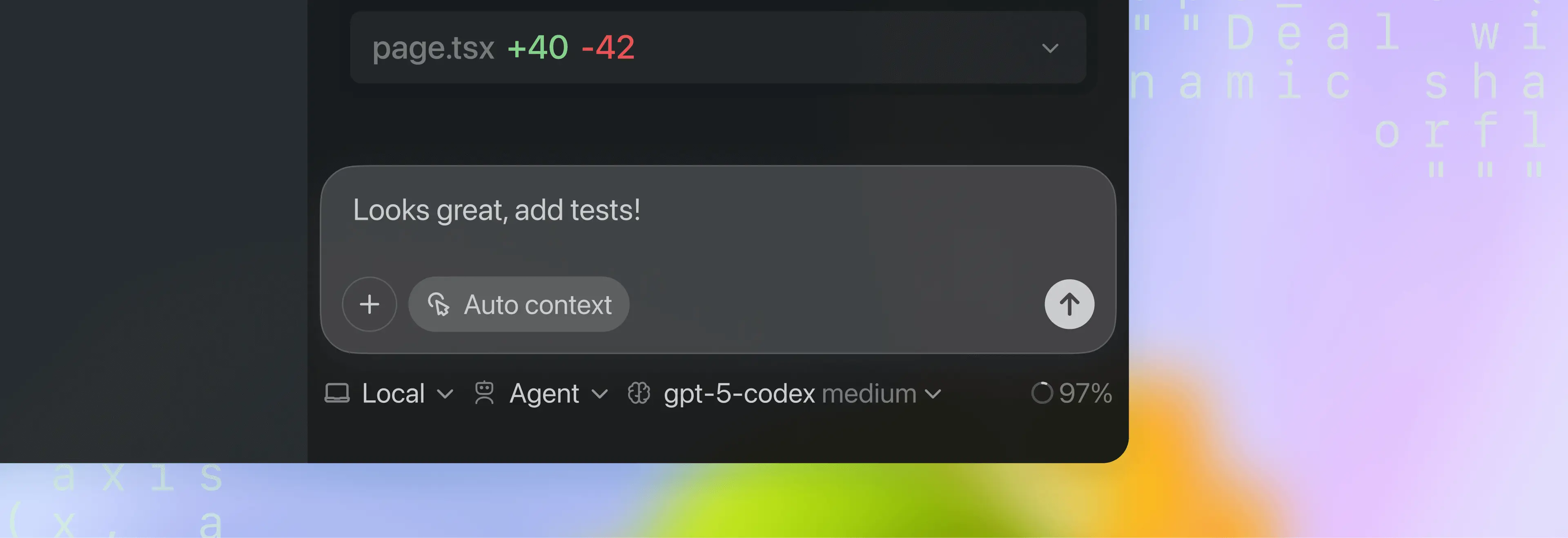

The implications for software development teams are substantial. Developers using Codex through IDE extensions and API integrations now benefit from a system that continuously refines its suggestions based on real-world usage patterns. The model's ability to improve its own capabilities means that the tool becomes more effective over time without requiring developers to adopt entirely new versions or workflows.

Codex is reachable from popular development environments, including Visual Studio Code extensions and web-based interfaces, so developers can use these enhanced capabilities within their existing workflows. Because improvements propagate automatically, teams benefit from them without manual intervention.

Limitations and Open Questions

Despite these advances, significant questions remain about the scalability and reliability of self-improving systems. Ensuring that autonomously generated improvements maintain code quality, security standards, and performance benchmarks requires robust validation frameworks. OpenAI has implemented multiple safeguards, but the long-term implications of increasingly autonomous capability development warrant continued scrutiny.
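One way to picture such a validation framework is as a gate that a proposed change must clear before it is accepted. The sketch below assumes a hypothetical `CandidateReport` that summarizes test results, security findings, and a benchmark runtime. It rejects any change that fails a test, has a security finding, or runs more than an assumed 5% slower than the baseline. OpenAI has not published its safeguards in this form; the field names, the `gate` function, and the threshold are illustrative only.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class CandidateReport:
    """Hypothetical summary of the checks run against one proposed change."""
    tests_passed: int
    tests_total: int
    runtime_ms: float
    security_findings: List[str] = field(default_factory=list)


def gate(
    report: CandidateReport, baseline_runtime_ms: float, max_slowdown: float = 0.05
) -> Tuple[bool, List[str]]:
    """Accept a change only if every check passes; otherwise return every reason it failed."""
    reasons: List[str] = []
    if report.tests_passed < report.tests_total:
        reasons.append(f"{report.tests_total - report.tests_passed} test(s) failing")
    if report.security_findings:
        reasons.append("security findings: " + ", ".join(report.security_findings))
    limit = baseline_runtime_ms * (1 + max_slowdown)  # assumed tolerance, 5% by default
    if report.runtime_ms > limit:
        reasons.append(f"runtime {report.runtime_ms:.1f} ms exceeds {limit:.1f} ms")
    return (not reasons, reasons)


accepted, reasons = gate(CandidateReport(40, 40, runtime_ms=101.0), baseline_runtime_ms=100.0)
print(accepted, reasons)  # True []
```

Collecting every failing rule, rather than stopping at the first, makes a rejection easier to audit, which speaks to the transparency concern raised below.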

Additionally, the transparency of these self-improvement mechanisms remains limited. Understanding precisely how Codex identifies capability gaps and generates solutions is essential for developers who depend on the system for critical infrastructure work.

Looking Forward

Codex's evolution toward autonomous capability building signals a maturation of code generation technology. As the system becomes more self-directed in its improvements, the relationship between human developers and AI assistants shifts from one of static tool usage to dynamic collaboration. The model's ability to identify and address its own limitations suggests that future iterations may require less frequent major updates while delivering more consistent improvements.

The broader implications extend beyond Codex itself. If large language models can effectively improve their own capabilities, the traditional model update cycle, in which human teams identify problems and implement solutions, may gradually give way to a more fluid process in which AI systems play an active role in their own evolution.
