GLM 4.6 Review: Outperforms Claude Code and Costs 10x Less (Ultimate 2025 Guide)

Discover GLM 4.6, the model that claims to outperform Claude Code at a tenth of the cost. Explore its massive context window, coding performance, and cost efficiency in our 2025 guide.



What is GLM 4.6?

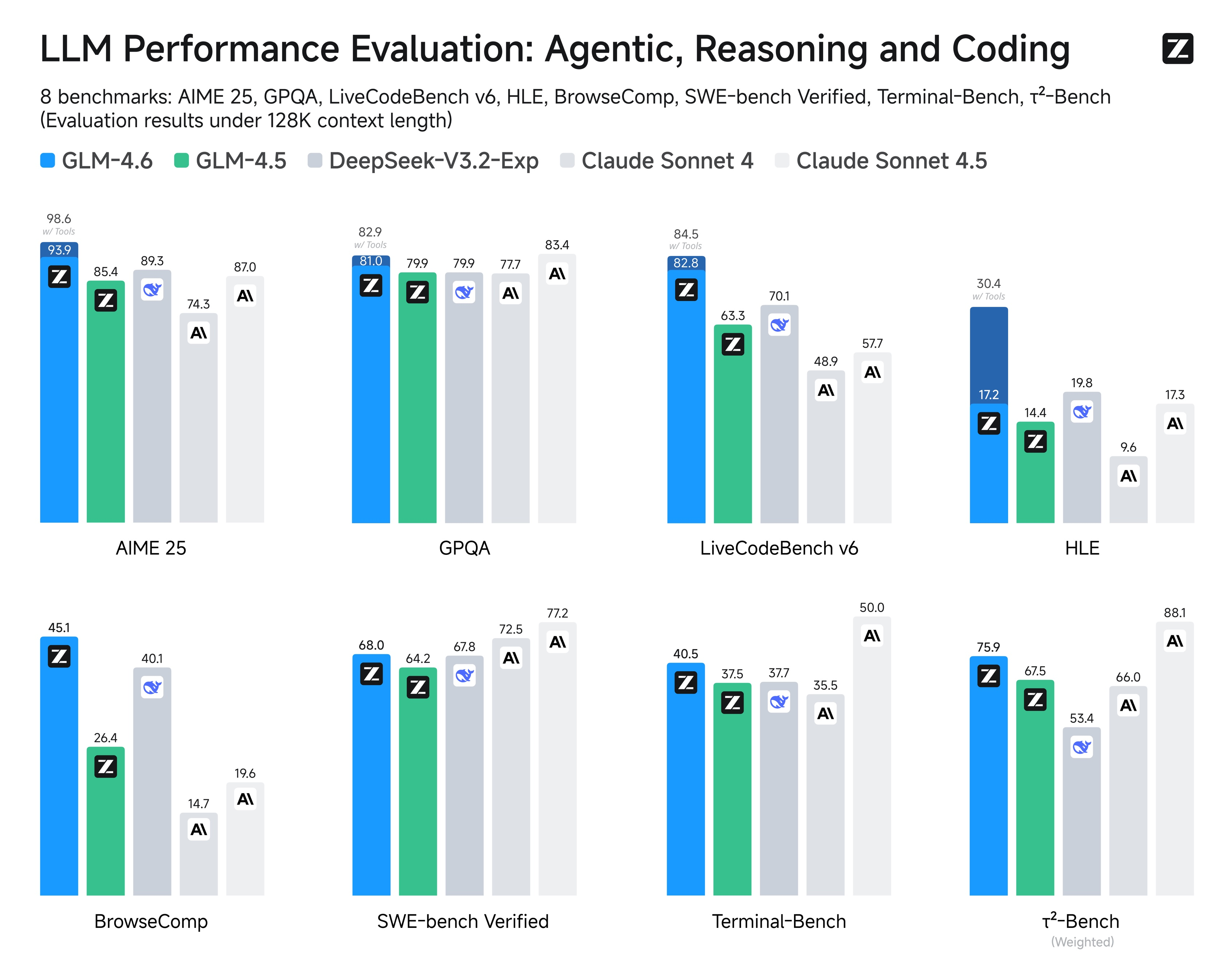

GLM 4.6 is an advanced open-source large language model (LLM) developed by Zhipu AI (now branded as Z.ai) and part of its General Language Model (GLM) family. Released in late 2025, it targets developers who need high-performance AI for coding, reasoning, and long-context understanding. Its core value proposition is a 200,000-token context window, a Mixture-of-Experts (MoE) architecture, and low operating cost: Z.ai claims it outperforms commercial rivals such as the Claude models behind Claude Code while costing up to 10 times less to run. The model is aimed mainly at developers and enterprises building AI agents, automation systems, and complex multi-document workflows.

Z.ai’s GLM 4.6 is gaining rapid traction in the AI community due to its combination of developer-grade performance, flexible local deployment options, and efficient API-based access. It competes directly with proprietary models such as Anthropic’s Claude series and OpenAI’s GPT-5, offering a powerful open-source alternative with a strong emphasis on coding intelligence and extended reasoning.

Key Features


Massive 200,000 Token Context Window

GLM 4.6 extends its context window from the 128K tokens of previous versions to 200K tokens, enabling it to process entire books, multi-document sets, or hours of dialogue in one session. This is a significant advantage for tasks like legal document analysis, software engineering workflows, and long-form content summarization.
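To make the 200K budget concrete, the sketch below greedily packs documents into batches that each fit a single request. It assumes a rough 4-characters-per-token ratio (`CHARS_PER_TOKEN`, a hypothetical heuristic, not GLM 4.6's tokenizer) and, assuming the reply shares the same window, reserves part of it for instructions and output. `estimate_tokens` and `pack_documents` are illustrative names.

```python
# Hypothetical heuristic: about 4 characters per token for English text.
# GLM 4.6's real tokenizer will differ; use it for exact counts.
CHARS_PER_TOKEN = 4
CONTEXT_TOKENS = 200_000


def estimate_tokens(text: str) -> int:
    """Rough token estimate from character count (ceiling division)."""
    return -(-len(text) // CHARS_PER_TOKEN)


def pack_documents(docs: list[str], budget: int = CONTEXT_TOKENS,
                   reserve: int = 8_000) -> list[list[str]]:
    """Greedily group documents, in order, into batches under the budget.

    `reserve` keeps room for instructions and the model's reply. A single
    document larger than the usable budget lands alone in its own batch
    and would still need splitting before it could be sent.
    """
    usable = budget - reserve
    batches: list[list[str]] = []
    current: list[str] = []
    used = 0
    for doc in docs:
        cost = estimate_tokens(doc)
        if current and used + cost > usable:
            batches.append(current)   # current batch is full; start a new one
            current, used = [], 0
        current.append(doc)
        used += cost
    if current:
        batches.append(current)
    return batches
```

Keeping documents whole and in order suits multi-document analysis, where splitting a file midway would lose context; for exact budgeting, replace `estimate_tokens` with a real tokenizer count.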

Enhanced Coding Intelligence

It performs strongly on programming tasks. On Zhipu AI's own CC-Bench benchmark it shows improved coding accuracy and logical correctness, and it uses about 15-30% fewer tokens than GLM 4.5 for similar tasks. This makes GLM 4.6 well suited to automated code generation, debugging, and AI-assisted software development.

Advanced Reasoning and Tool Integration

The model is optimized for multi-step reasoning and tool-augmented workflows, allowing it to act as the "brain" behind autonomous AI agents. Through tool calls it can work with external APIs, databases, search tools, and execution environments while maintaining context and task continuity over long sessions.

Mixture-of-Experts (MoE) Architecture

GLM 4.6 uses a 357-billion-parameter MoE design that balances computational efficiency with high accuracy. In an MoE model, a router activates only a few expert subnetworks for each token, so inference touches a fraction of the total parameters and costs less compute without a matching loss in quality. The model also supports FP8 and Int4 quantization on specialized hardware, enabling efficient deployment on modern AI chips.
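The routing idea can be sketched with generic softmax top-k gating. This is a textbook illustration, not GLM 4.6's actual router: the number of experts, the value of `k`, and the toy scalar "experts" below are assumptions chosen for readability.

```python
import math
from typing import Callable


def softmax(logits: list[float]) -> list[float]:
    """Numerically stable softmax over router scores."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(router_logits: list[float], k: int) -> list[tuple[int, float]]:
    """Select the k highest-probability experts and renormalize their weights."""
    probs = softmax(router_logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]


def moe_forward(x: float, experts: list[Callable[[float], float]],
                router_logits: list[float], k: int = 2) -> float:
    """Weighted sum of the selected experts' outputs; unselected experts never run."""
    return sum(w * experts[i](x) for i, w in route_top_k(router_logits, k))
```

Because only `k` experts execute per token, compute per token tracks the active parameters rather than the total parameter count.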

Natural Language Alignment and Safety

Continuous reinforcement learning and preference optimization have improved the model's conversational flow, style adaptability, and safety alignment. GLM 4.6 can adjust tone and style across contexts such as formal documentation, tutoring, or creative writing, enhancing user trust and readability.

Multimodal Capabilities (Emerging)

GLM 4.6 itself is a text-only model. Multimodal tasks such as document-image understanding rely on pairing it with separate vision models, so text remains its primary modality.

How GLM 4.6 Works

Users can access GLM 4.6 via Z.ai's API, through third-party platforms such as CometAPI, or by deploying it locally, since its weights are openly available. The model takes text as input and returns detailed, context-aware responses, with a maximum output of 128K tokens for extended replies.
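A minimal request sketch, assuming Z.ai exposes an OpenAI-style chat-completions endpoint. The URL, the model id `glm-4.6`, and the payload field names are assumptions to check against Z.ai's API documentation; `build_request` is a hypothetical helper that enforces the 128K output cap mentioned above.

```python
import json
import urllib.request

# Assumptions to verify against Z.ai's docs: endpoint URL, model id, field names.
API_URL = "https://api.z.ai/api/paas/v4/chat/completions"
MODEL_ID = "glm-4.6"
MAX_OUTPUT_TOKENS = 128_000  # output cap stated in this review


def build_request(prompt: str, api_key: str,
                  max_tokens: int = 4_096) -> urllib.request.Request:
    """Build (but do not send) a chat-completions POST request."""
    if not 0 < max_tokens <= MAX_OUTPUT_TOKENS:
        raise ValueError(f"max_tokens must be in 1..{MAX_OUTPUT_TOKENS}")
    payload = {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )
```

Sending it is then `urllib.request.urlopen(req)`; keeping construction separate from sending makes the payload easy to inspect and test before spending tokens.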

Typical usage flow:

- Input Submission: Developers send text prompts or coding tasks up to 200K tokens in length.

- Model Processing: For each token, the MoE router activates a small subset of expert subnetworks rather than the full network.

- Tool Integration: GLM 4.6 can emit structured tool calls; the calling application runs the requested tool or API and returns the result to the model, which then continues the task.

- Output Generation: Responses are generated with improved token efficiency, maintaining logical coherence over long documents or codebases.

- Integration: The output is returned via API for use in chatbots, coding assistants, document summarizers, or autonomous agent frameworks.
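The flow above amounts to a loop: send the conversation, run any tools the model asks for, append the results, and repeat until the model answers in plain text. The sketch assumes OpenAI-style `tool_calls` messages; `run_agent`, `fake_model`, and the `add` tool are hypothetical, and `fake_model` stands in for a real GLM 4.6 API call so the loop can run offline.

```python
import json
from typing import Callable

# Hypothetical OpenAI-style message dicts; real GLM 4.6 responses may differ.
Message = dict


def run_agent(call_model: Callable[[list[Message]], Message],
              tools: dict[str, Callable[..., object]],
              prompt: str, max_steps: int = 5) -> str:
    """Send messages, execute requested tools, feed results back, repeat."""
    messages: list[Message] = [{"role": "user", "content": prompt}]
    for _ in range(max_steps):
        reply = call_model(messages)              # model processing step
        messages.append(reply)
        tool_calls = reply.get("tool_calls") or []
        if not tool_calls:
            return reply["content"]               # final answer: no tools requested
        for call in tool_calls:                   # tool integration step
            fn = call["function"]
            result = tools[fn["name"]](**json.loads(fn["arguments"]))
            messages.append({"role": "tool", "tool_call_id": call["id"],
                             "content": json.dumps(result)})
    raise RuntimeError("agent did not finish within max_steps")


def fake_model(messages: list[Message]) -> Message:
    """Offline stand-in for a GLM 4.6 call: asks for `add`, then answers."""
    if messages[-1]["role"] == "user":
        return {"role": "assistant", "content": None, "tool_calls": [{
            "id": "call_1", "type": "function",
            "function": {"name": "add", "arguments": json.dumps({"a": 2, "b": 3})},
        }]}
    return {"role": "assistant", "content": f"The sum is {messages[-1]['content']}"}
```

With these stubs, `run_agent(fake_model, {"add": lambda a, b: a + b}, "What is 2 + 3?")` returns `"The sum is 5"`. The `max_steps` bound keeps a model that keeps requesting tools from looping forever.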

The model supports FP8/Int4 quantization on hardware like Cambricon chips and Moore Threads GPUs, lowering inference costs and enabling on-premises or cloud deployment options.
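As a rough illustration of what Int4 quantization does, the sketch maps weights to 4-bit integers with one shared scale (symmetric, per-tensor). This generic scheme is an assumption for illustration: the FP8/Int4 formats used on Cambricon or Moore Threads hardware are vendor-specific, and production kernels typically use per-channel or per-group scales and pack two 4-bit values per byte.

```python
def quantize_int4(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor Int4 for a non-empty list of weights.

    Values map to integers in [-7, 7], the symmetric part of the signed
    4-bit range [-8, 7]; the clamp is a defensive guard.
    """
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 7 if max_abs > 0 else 1.0
    q = [max(-8, min(7, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int4(q: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from 4-bit codes and the shared scale."""
    return [v * scale for v in q]
```

Each weight then needs 4 bits instead of 16, at the cost of a rounding error of at most half a scale step per weight.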

Pricing and Plans

Z.ai offers GLM 4.6 primarily via subscription-based API access, with pricing designed to be highly competitive relative to commercial alternatives.

- Input Tokens: Approximately $0.5 per million tokens

- Output Tokens: Approximately $1.9 per million tokens

These rates represent a significant cost reduction compared to commercial offerings like Claude Code or GPT-5, which often charge substantially more per token. The 10x figure is a headline claim rather than a fixed ratio: actual savings depend on the model and plan being compared, the workload, and GLM 4.6's lower token usage per task.
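A quick way to sanity-check the pricing for your own workload is to multiply token volumes by the per-million rates. The sketch uses the approximate rates quoted above; the workload sizes are made up, and comparing against another provider means passing that provider's published rates, which are not assumed here.

```python
# Approximate GLM 4.6 rates quoted in this review, USD per million tokens.
INPUT_RATE = 0.5
OUTPUT_RATE = 1.9


def cost_usd(input_tokens: int, output_tokens: int,
             input_rate: float = INPUT_RATE,
             output_rate: float = OUTPUT_RATE) -> float:
    """Cost of a workload given per-million-token input and output prices."""
    return (input_tokens * input_rate + output_tokens * output_rate) / 1_000_000


# Hypothetical monthly workload: 10M input tokens, 2M output tokens.
baseline = cost_usd(10_000_000, 2_000_000)                 # 5.00 + 3.80 = 8.80 USD
# Same work with 15% fewer output tokens (low end of the efficiency claim).
efficient = cost_usd(10_000_000, 2_000_000 * 85 // 100)    # 5.00 + 3.23 = 8.23 USD
```

Plugging a competitor's rates into `input_rate` and `output_rate` gives a like-for-like comparison for the same workload, which is a more reliable guide than a headline multiplier.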

Z.ai provides various subscription tiers aimed at developers and enterprises, including:

- Freemium or Trial Options: Limited free tier or trial period for testing

- Developer Plans: Access to API with token limits suited for coding and research

- Enterprise Plans: Higher quotas, dedicated support, and local deployment licenses

This pricing model makes GLM 4.6 attractive for startups, research labs, and organizations needing extensive AI-assisted coding or agent development without prohibitive costs.

Pros and Cons

Pros

- Extremely Large Context Window (200K tokens) enables long-form reasoning and whole-document processing in a single request.

- Superior Coding Performance with improved accuracy and token efficiency, ideal for software development and automation.

- Cost Efficiency with significantly lower API pricing and optimized inference on modern hardware.

- Open-Source Model Weights allow flexible local deployments and customization.

- Strong Tool Integration supports autonomous AI agents capable of multi-step planning and API orchestration.

- Robust Natural Language Alignment improves safety, style matching, and conversational quality.

Cons

- Primarily Text-Only with limited native multimodal support compared to some competitors.

- Relatively New Model with an evolving ecosystem and fewer third-party integrations compared to GPT or Claude.

- Hardware Requirements for efficient deployment may be demanding due to MoE architecture and large parameter count.
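A back-of-the-envelope estimate shows why. Although an MoE model runs only a few experts per token, every expert must stay loaded because any token may be routed to any of them, so weight memory scales with the total 357B parameters cited above. The sketch covers weights only (no KV cache for long contexts, no activations or runtime overhead) and uses decimal gigabytes.

```python
TOTAL_PARAMS = 357e9  # total parameter count cited in this review

# Storage cost per parameter for common inference formats.
BYTES_PER_PARAM = {"BF16": 2.0, "FP8": 1.0, "Int4": 0.5}


def weight_memory_gb(params: float, fmt: str) -> float:
    """Memory needed for the weights alone, in GB (10**9 bytes)."""
    return params * BYTES_PER_PARAM[fmt] / 1e9


for fmt in BYTES_PER_PARAM:
    print(f"{fmt}: {weight_memory_gb(TOTAL_PARAMS, fmt):.1f} GB")
# BF16: 714.0 GB, FP8: 357.0 GB, Int4: 178.5 GB
```

Even at Int4 the weights alone exceed a single 80 GB accelerator, which is why local deployment usually means multiple GPUs or heavy offloading.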

- Documentation and Community Resources are still growing, which may pose a learning curve for new users.

Who Should Use GLM 4.6?

GLM 4.6 is ideal for:

- Developers and AI Researchers seeking a powerful open-source LLM with extensive context and coding capabilities.

- Enterprises Building Autonomous Agents that require reliable tool-calling and multi-step reasoning.

- Startups and SMEs looking for cost-effective AI alternatives without sacrificing performance.

- Organizations Needing Extended Document Analysis such as legal firms, academic researchers, and content creators.

- Tech Teams Interested in Local Deployment and hardware-accelerated inference.

It may be less suitable for users requiring immediate out-of-the-box multimodal AI or those prioritizing mature ecosystems with extensive third-party plugins.

Alternatives to GLM 4.6

| Tool | Strengths | Use Case | Cost vs. GLM 4.6 |
|---|---|---|---|
| Claude Sonnet 4.5 (Claude Code) | Strong coding and conversational AI | Commercial AI coding assistant | Higher token costs |
| GPT-5 | Broad multimodal capabilities | General AI, multimodal tasks | More expensive, proprietary |
| Qwen | Emerging open-source alternative | Coding and general NLP | Comparable, less mature |

Useful Links

- Try GLM 4.6 here

- Pricing Details: Z.ai GLM 4.6 Pricing

- API Documentation: GLM 4.6 API Docs on CometAPI

- Demo & Review Video: GLM-4.6 Benchmark and Comparison - YouTube

Visuals and Screenshots

- Official GLM 4.6 Logo and Branding: Available on Z.ai’s official website and subscription page.

- API Dashboard Screenshots: Found on CometAPI and Z.ai platforms showcasing token usage, request logs, and model parameters.

- Coding Assistance Demo: YouTube video walkthroughs demonstrate real-time code generation and reasoning.

- Architecture Diagrams: Illustrate MoE design and token flow in official Z.ai research blogs.

These visuals highlight the professional UI, developer-friendly API, and performance metrics that showcase GLM 4.6’s capabilities.

GLM 4.6 represents a significant leap forward in open-source large language models, combining cutting-edge architecture, extended context, and cost efficiency to rival industry-leading commercial AI offerings. Its strengths in coding and autonomous agent workflows make it a compelling choice for developers and enterprises focused on high-scale, cost-effective AI deployments.

Curious about GLM 4.6?

If you'd like to explore it, here's the direct link:

Visit GLM 4.6 (support link: helps AI Daily at no extra cost)