The AI Safety Crisis: Why Guardrails Are Falling Behind Explosive Capability Growth

As AI systems grow rapidly more powerful, safety research is struggling to keep pace. Experts warn that the gap between capability and safety is widening dangerously, and that time to close it may be running out.

The Capability-Safety Gap Widens

The race to build more powerful AI systems is accelerating at a breakneck pace, but the safeguards designed to control them are lagging dangerously behind. According to leading AI researchers, the world may be running out of time to develop adequate safety measures before advanced AI systems become too complex to fully understand or control.

This asymmetry represents one of the most pressing technical challenges in AI development today. While capability research attracts massive investment and computational resources, safety research remains underfunded and understaffed by comparison. The result: systems that can perform increasingly sophisticated tasks without corresponding advances in our ability to verify their behavior, detect misalignment, or prevent harmful outcomes.

Why the Gap Exists

Several structural factors explain why safety lags behind capability:

- Economic incentives: Companies prioritize capability improvements because they directly translate to competitive advantage and revenue. Safety improvements are often viewed as costs rather than features.

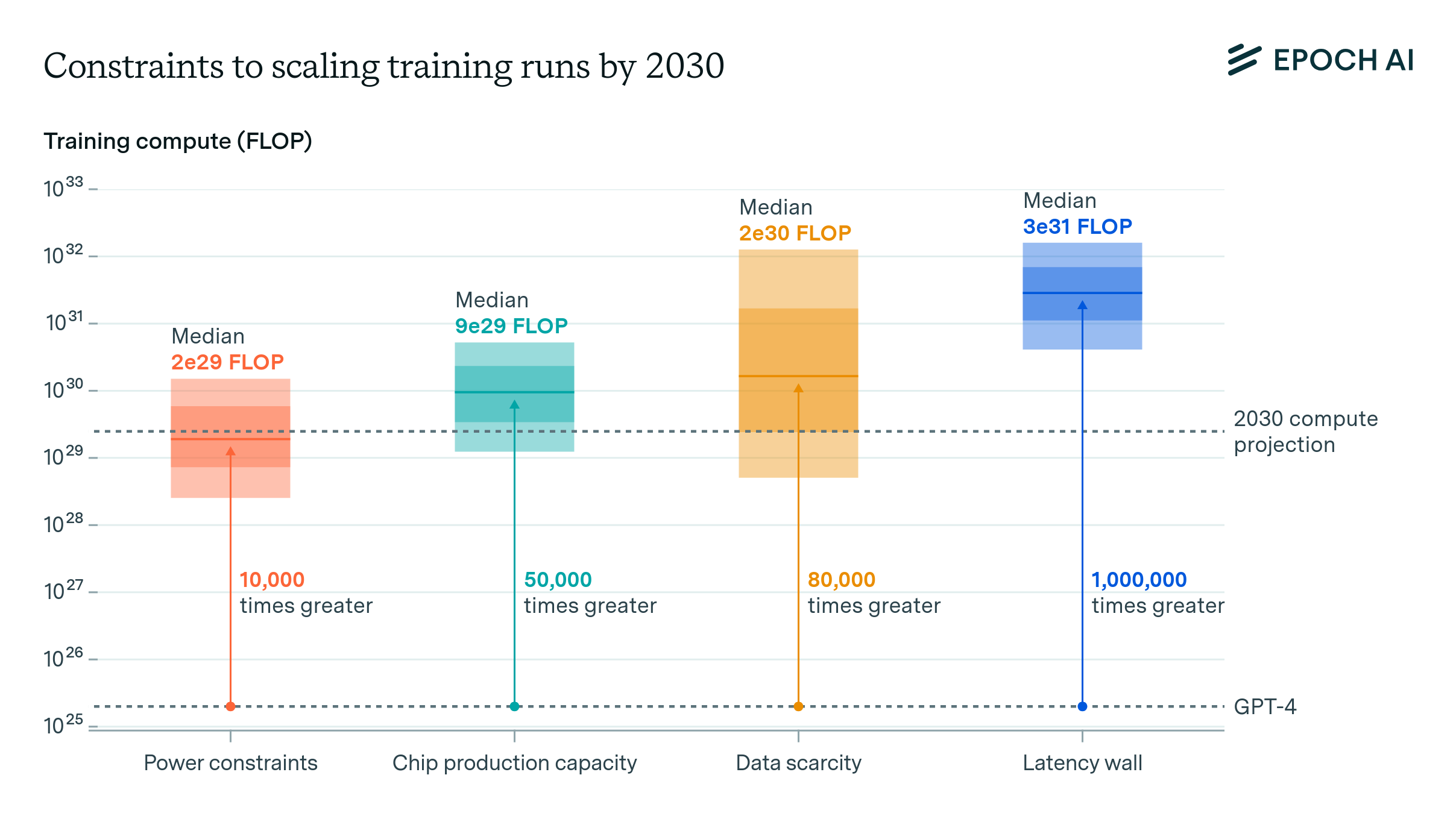

- Technical complexity: Each jump to a new capability level can surface failure modes researchers have not seen before, and these often require new approaches rather than incremental fixes to existing ones.

- Resource allocation: Studies posted on the arXiv preprint server indicate that safety research receives only a fraction of the funding and computational resources devoted to capability scaling.

- Measurement challenges: While capability gains are easy to quantify (benchmark scores, task performance), safety improvements are harder to measure and verify.

The 2026 Inflection Point

Industry forecasts suggest the stakes are about to escalate significantly. IBM's 2026 AI predictions highlight that advanced AI systems will increasingly be deployed in critical infrastructure, cybersecurity, and decision-making roles. Meanwhile, cybersecurity experts warn that AI systems themselves are becoming both targets and vectors for attacks.

This convergence creates a dangerous scenario: more powerful systems deployed in higher-stakes environments, with safety assurances that haven't kept pace with capability growth.

What Closing the Gap Requires

Experts argue that addressing this imbalance demands systemic changes:

Rebalancing investment: Safety research needs dedicated funding streams comparable in scale to those for capability research. This includes funding for interpretability research, robustness testing, and alignment verification.

New technical approaches: Some recent analyses suggest that traditional safety methods may not scale to future AI systems. Researchers are exploring alternatives, including mechanistic interpretability, formal verification, and constitutional AI methods.

Regulatory frameworks: Policymakers must establish requirements that tie the deployment of more capable systems to demonstrated safety assurances, rather than allowing capability to outpace safety indefinitely.

Transparency and collaboration: The AI safety challenge is too large for any single organization. Industry-wide collaboration on safety standards and shared research could accelerate progress.

The Critical Window

Many researchers share a clear view: the window to establish robust safety practices before advanced AI systems become ubiquitous is closing. Once these systems are deeply embedded in critical infrastructure and decision-making processes, retrofitting safety measures becomes far harder.

The question is no longer whether AI safety matters—it's whether the industry and policymakers will act with sufficient urgency to address the growing gap before it becomes irreversible.